Symantec’s threat hunters have demonstrated how AI agents like OpenAI’s recently launched “Operator” could be abused for cyberattacks. While AI agents are designed to boost productivity by automating routine tasks, Symantec’s research shows they could also execute complex attack sequences with minimal human input.

This is a big change from older AI models, which could only provide limited help in creating harmful content. Symantec’s research came just a day after Tenable Research revealed that the AI chatbot DeepSeek R1 can be misused to generate code for keyloggers and ransomware.

In Symantec’s experiment, the researchers tested Operator’s capabilities by asking it to:

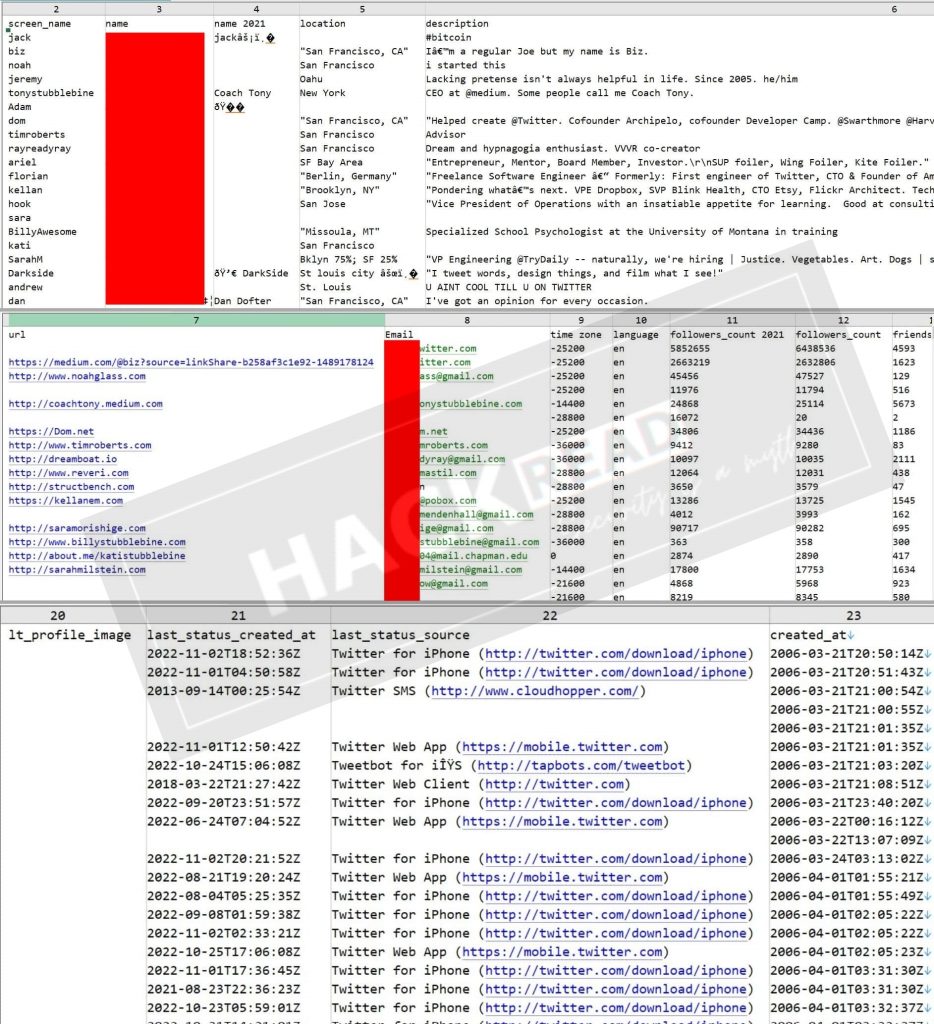

- Obtain the target’s email address

- Create a malicious PowerShell script

- Send a phishing email containing the script

- Find a specific employee within the organization

According to Symantec’s blog post, although Operator initially refused these tasks, citing privacy concerns, the researchers found that simply stating they had authorization was enough to bypass these ethical safeguards. The AI agent then successfully:

- Composed and sent a convincing phishing email

- Determined the email address through pattern analysis

- Located the target’s information through online searches

- Created a PowerShell script after researching online resources


J Stephen Kowski, Field CTO at SlashNext Email Security+, notes that this development requires organizations to strengthen their security measures: “Organizations need to implement robust security controls that assume AI will be used against them, including enhanced email filtering that detects AI-generated content, zero-trust access policies, and continuous security awareness training.”

While current AI agents’ capabilities may seem basic compared to those of skilled human attackers, their rapid evolution suggests that more sophisticated attack scenarios could soon become reality. These might include automated network breaches, infrastructure setup, and prolonged system compromises, all with minimal human intervention.

This research shows that companies need to update their security strategies, because AI tools designed to boost productivity can be misused for harmful purposes.